ELT Versus ETL in the Era of Cloud Databases

Posted on August 30th, 2022

ELT is emerging as a preferred approach in the era of Cloud Databases versus traditional ETL. Here's why.

Over the decades, the term "ETL" had become the standard method to move data from operational systems to a data warehouse or equivalent for analysis. First, an IT organization-led team would design a process to "extract" data from its sources, then apply various rules to "transform" the data into the shape it needed to be per the specifications of a Data Warehouse. This usually also involved a cleansing step of some kind. Then, the transformed data would be "loaded" into a warehouse. This of course usually had an IT bottleneck, with various backlogs and other efforts, making it often a difficult, time-consuming, but necessary process for an organization to be able to drive any kind of business intelligence from their data assets. Hence, the acronym "ETL" for Extract, Transform, and Load - in that order - became an industry standard approach for data-driven organizations.

Now in the Cloud Database era, 75% of databases live on the Cloud. With much less friction in data availability and far fewer costs in data storage, the trend has started to shift to an "ELT" process. In this methodology, the order of operations has changed. After data is "Extracted", it is immediately "Loaded" and available for analysis in a Cloud Data Warehouse. This gets accessibility to raw data available to users more quickly.

Once loaded, "Transformation" can begin within the Cloud Data Warehouse (or all-encompassing Data Lake, also popular). So transformed data will be readily available, albeit a little later, and also augmented with the raw data from which the transformed data originally evolved from. In the era of Data Science and Advanced Analytics, access to source data and data lineage trails can be very useful, as it can be difficult to predict prior to transformation which data will be the most applicable to a given use case.

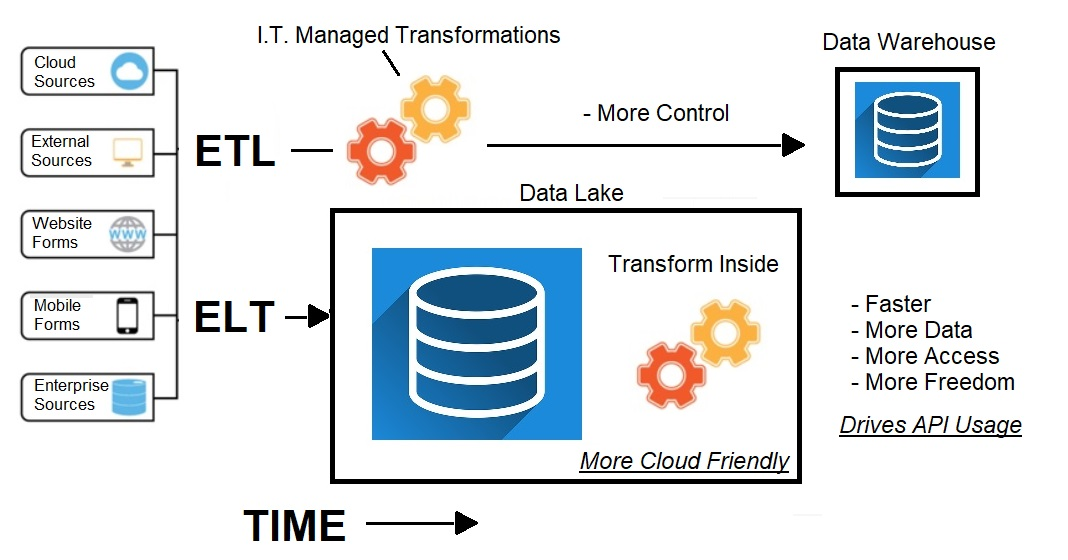

In the above diagram, you can see the various data sources from which data might be extracted from, moving as a function of time through both an ETL and ELT process. ETL does provide more control over data from the IT organization, which is useful in areas where compliance and control factors are important. However, it is still a slower process that ultimately results in more concise and less detailed data in a Data Warehouse or Data Lake.

In the bottom "ELT" scenario of the diagram, the data is more quickly available for potential analysis. There is also much more data available that could be of use in Analytics scenarios, has data lineage characteristics built-in, and can still undergo transformation processes along the way, including cleansing, summarization, and other types of data management and organization. It's like having both the book summary that students often use in educational scenarios, along with the entire book itself for reading or reference. Using both together will likely provide the richer experience and have the most impact.

These are not mutually exclusive approaches within an organization, and there is a place for both and tradeoffs to explore, but the trend in the era of the Cloud database is clearly more data faster, including raw source data and its corresponding lineage. We will continue to see ELT increase in usage versus the traditional ETL approach.

AI Interactive Data Client: Request and Receive Structured Data of Any Kind on Any Subject.

More...

Github Code Examples

More...

Generate your own Datasets: Retrieve Customized, Real-World Data on Demand as Defined by You

More...

High-Performance Batch Processing: Call our APIs with Text Files as Input.

More...

Try our Pay-as-you-Go Option

More...

Available in the AWS Marketplace.

More...

Free Trial Usage Credits

Check out our full list of AI-powered APIs

More...

Documentation and Overview

More...

Product Newsletter

More...